Google Gemini 2.0 announced with multimodal image and audio output, agentic AI features

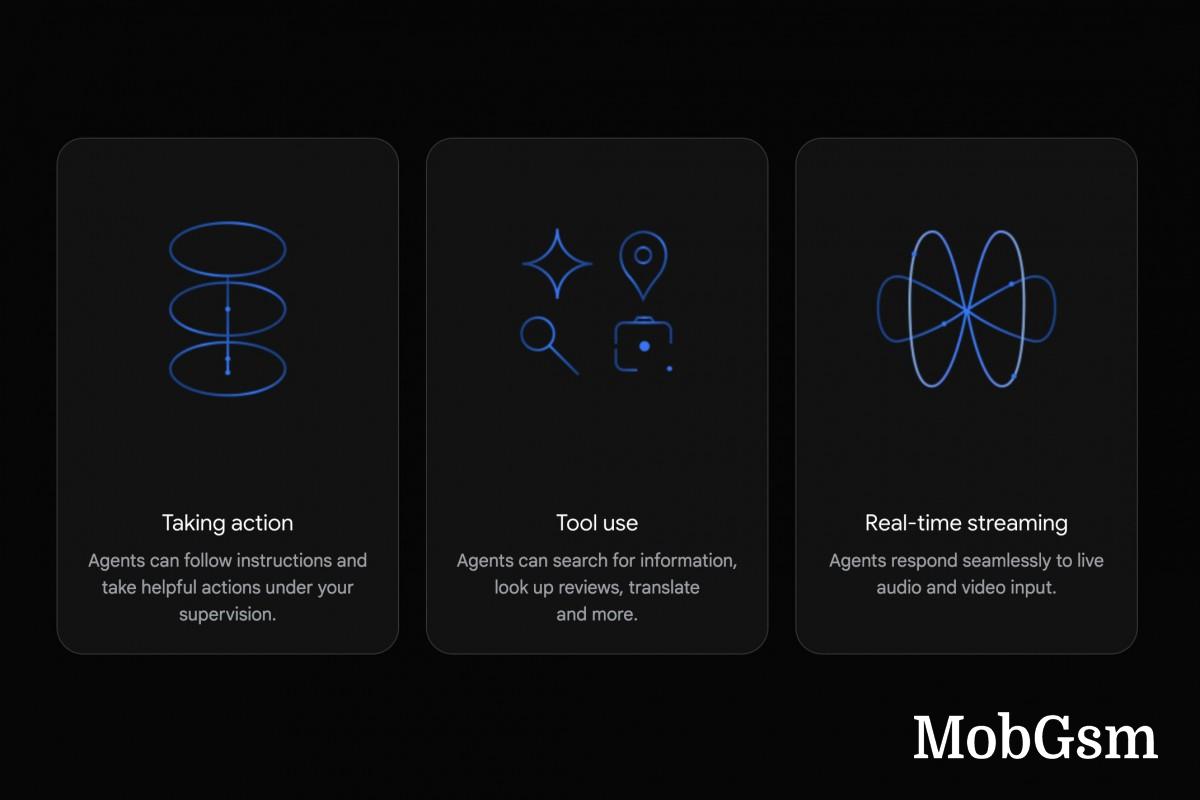

Google unveiled Gemini 2.0, the latest generation of its AI model, which now supports image and audio output and tool integration for the “agentic era”. Agentic AI refers to systems that can independently accomplish tasks with adaptive decision-making. Think of automating tasks like shopping or scheduling an appointment from a single prompt.

Gemini 2.0 will feature multiple agents that can help you in all sorts of fields, from providing real-time suggestions in games like Clash of Clans to picking out a gift and adding it to your shopping cart based on a prompt.

Like other AI agents, the ones in Gemini 2.0 feature goal-oriented behavior: they can create a task-based list of steps and carry them out autonomously. Agents in Gemini 2.0 include Project Astra, designed as a universal AI assistant for Android phones, with multimodal support and integration with Google Search, Lens and Maps.
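To make the "list of steps, then carry them out" idea concrete, here is a minimal sketch of a plan-and-execute loop in Python. It is not how Gemini 2.0 or Project Astra is implemented; the `plan` function, the `Step` type and the two tools are hypothetical stand-ins, and a real agent would ask the model itself to produce the plan.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the (hypothetical) tool to call
    argument: str  # input passed to that tool


def plan(goal: str) -> List[Step]:
    """Stand-in planner: returns a fixed two-step plan for any goal.

    A real agent would generate this list with the model.
    """
    return [
        Step("search", goal),
        Step("add_to_cart", "first result"),
    ]


def run_agent(goal: str, tools: Dict[str, Callable[[str], str]]) -> List[str]:
    """Run each planned step in order and collect what each tool returned."""
    log = []
    for step in plan(goal):
        result = tools[step.tool](step.argument)
        log.append(f"{step.tool}: {result}")
    return log


# Hypothetical tools that only return strings; nothing is actually searched or bought.
TOOLS = {
    "search": lambda query: f"found 3 items for '{query}'",
    "add_to_cart": lambda item: f"added {item} to cart",
}

if __name__ == "__main__":
    for line in run_agent("gift for a coffee lover", TOOLS):
        print(line)
```

The point of the sketch is only the control flow: a goal becomes an ordered list of tool calls, and the loop runs them without further user input.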

Project Mariner is another experimental AI agent that can navigate on its own within a web browser. Mariner is now available in early preview form for “trusted testers” as a Chrome extension.

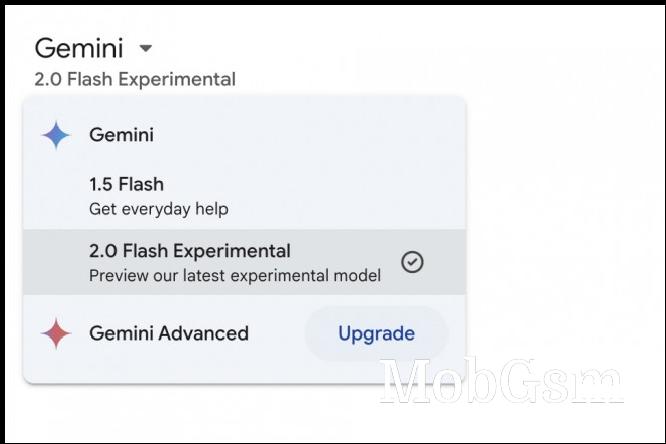

Outside of the AI agents, Gemini 2.0 Flash is the first version of Google’s new AI model. For now it’s an experimental (beta) version, with lower latency, better benchmark performance and improved reasoning and understanding in math and coding compared to the Gemini 1.0 and 1.5 models. It can also generate images natively, powered by Google DeepMind’s Imagen 3 text-to-image model.

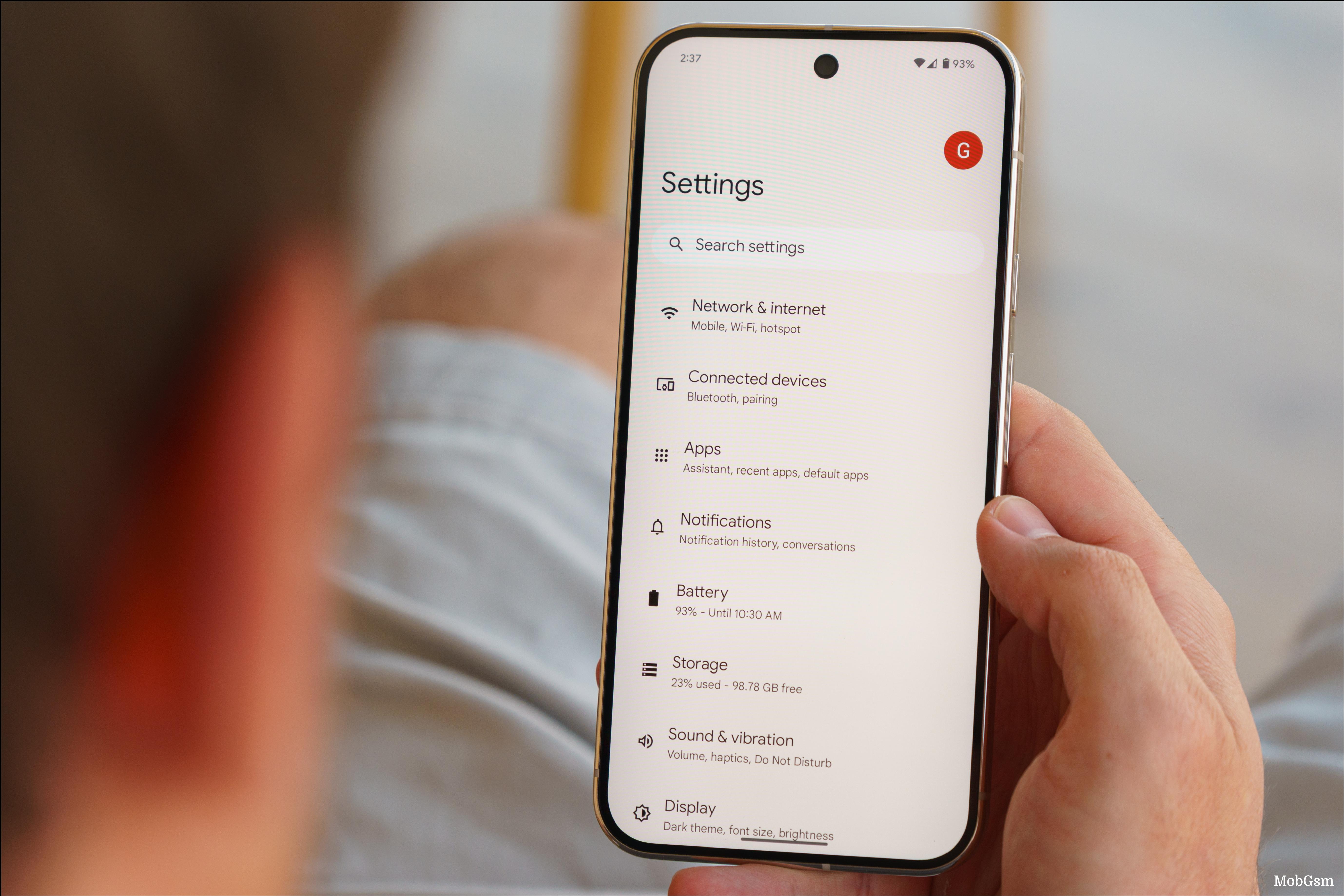

Gemini 2.0 Flash Experimental is available on the web for all users and is coming soon to the mobile Gemini app. Users who want to test it out will need to select Gemini 2.0 Flash Experimental from the model dropdown menu.


Developers can also access the new model via Google AI Studio and Vertex AI. Google also confirmed it will announce more Gemini 2.0 model sizes in January.
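For developers curious what a call might look like, below is a rough sketch that builds a text request for the Gemini API's REST `generateContent` endpoint using only the Python standard library. The model ID `gemini-2.0-flash-exp`, the `v1beta` endpoint path and the `GEMINI_API_KEY` environment variable name are assumptions that do not come from the announcement, so check Google AI Studio for the current values before relying on them.

```python
import json
import os
import urllib.request

# Assumed values, not taken from the announcement.
MODEL = "gemini-2.0-flash-exp"
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{BASE_URL}/{model}:generateContent?key={api_key}"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        request = build_request("Say hello in one sentence.", key)
        with urllib.request.urlopen(request) as response:
            reply = json.load(response)
        # Print the text of the first candidate in the response.
        print(reply["candidates"][0]["content"]["parts"][0]["text"])
    else:
        print("Set GEMINI_API_KEY to send the request.")
```

Building the request separately from sending it keeps the example inspectable without an API key; Google's official SDKs wrap the same call and are likely the more convenient option in practice.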