Understanding HDR10 and Dolby Vision

One of the most popular buzzwords today in the world of technology is HDR. Everywhere you go, you see televisions, video streaming services, game consoles, and even smartphones advertising that they are HDR compatible.

Introduced back in 2015, HDR was meant to go beyond the unimaginative resolution bumps that characterized most of the video improvements at that point and do something more meaningful. Instead of just having more pixels, the goal here was to have better pixels. The focus was on improving the dynamic range by allowing a greater variation in the light output levels while also allowing a wider range of colors.

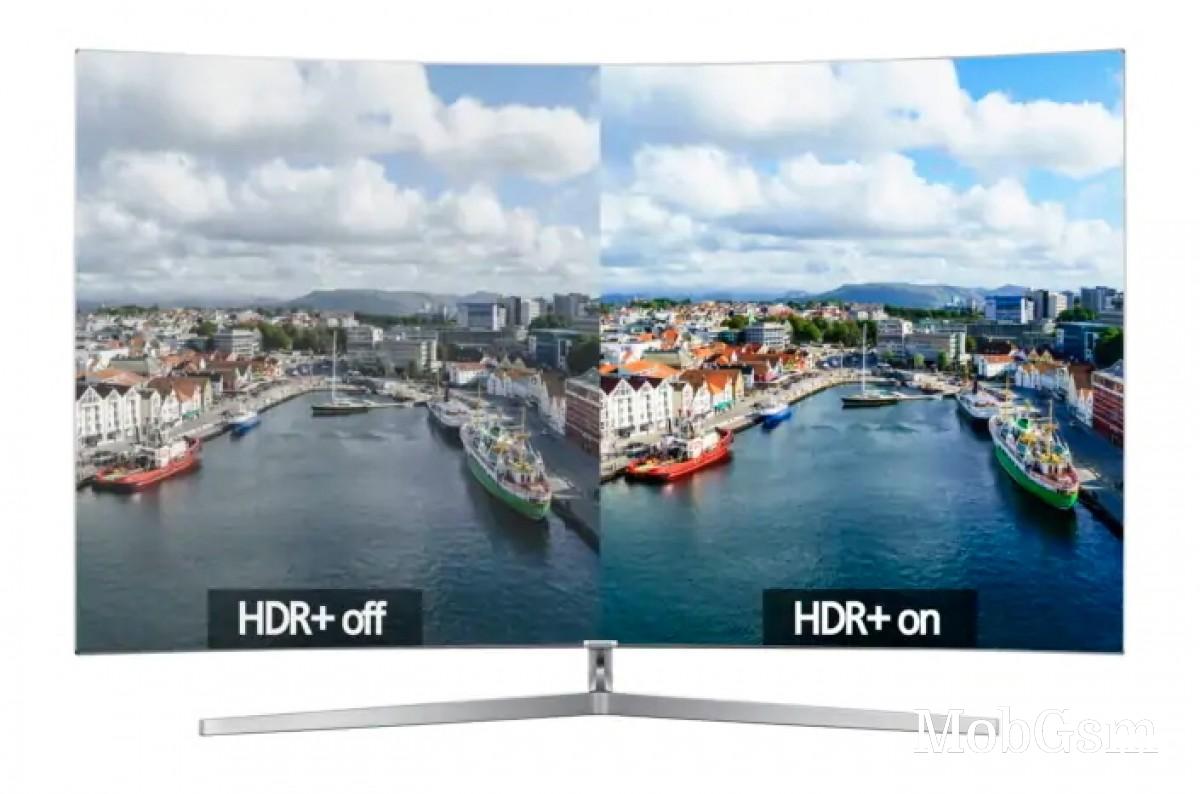

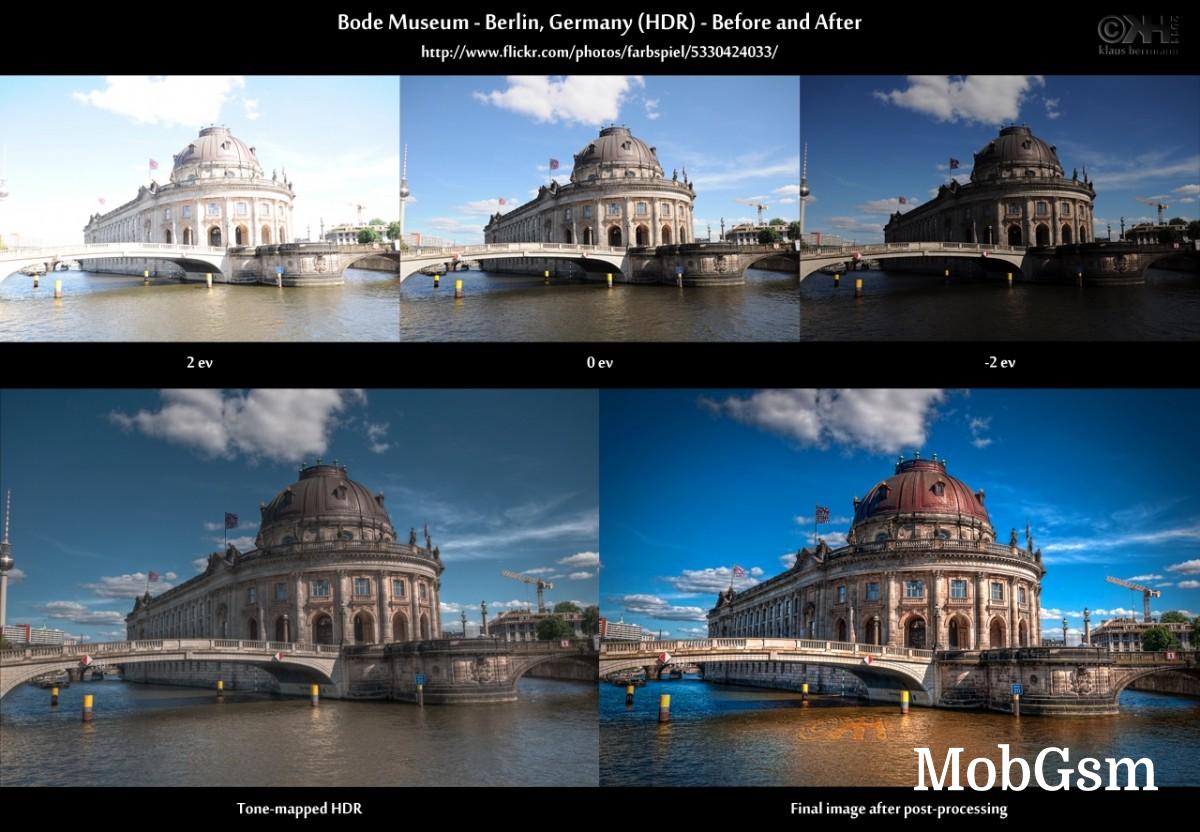

Don't we all just love seeing HDR comparisons on our SDR screens?

HDR has since grown quite a lot in popularity, with manufacturers latching on to yet another thing they can sell with an easy-to-market acronym. Along with that, we also got the now trite phrase "brighter whites, darker blacks". But if you ask the average person what they actually understand from this phrase, or from any of the marketing surrounding HDR and its various formats, chances are they wouldn't really know.

So today we try to demystify HDR by explaining what it means, how it differs from regular old "SDR", how it is made, what Dolby Vision even is and whether it's related, and, more importantly, whether you should even care about it.

Dynamic range

If we want to understand HDR or High Dynamic Range, we first need to start with the basics, and that is the dynamic range part. The dynamic range of something is the difference between the lowest and the highest values it can produce.

While the term is used in a variety of contexts, in this article we will only discuss dynamic range as it pertains to displays and cameras. For a display, the dynamic range is the difference between the brightest and dimmest light it can produce. For a camera, it's the difference between the brightest and dimmest light it can capture at any given setting.

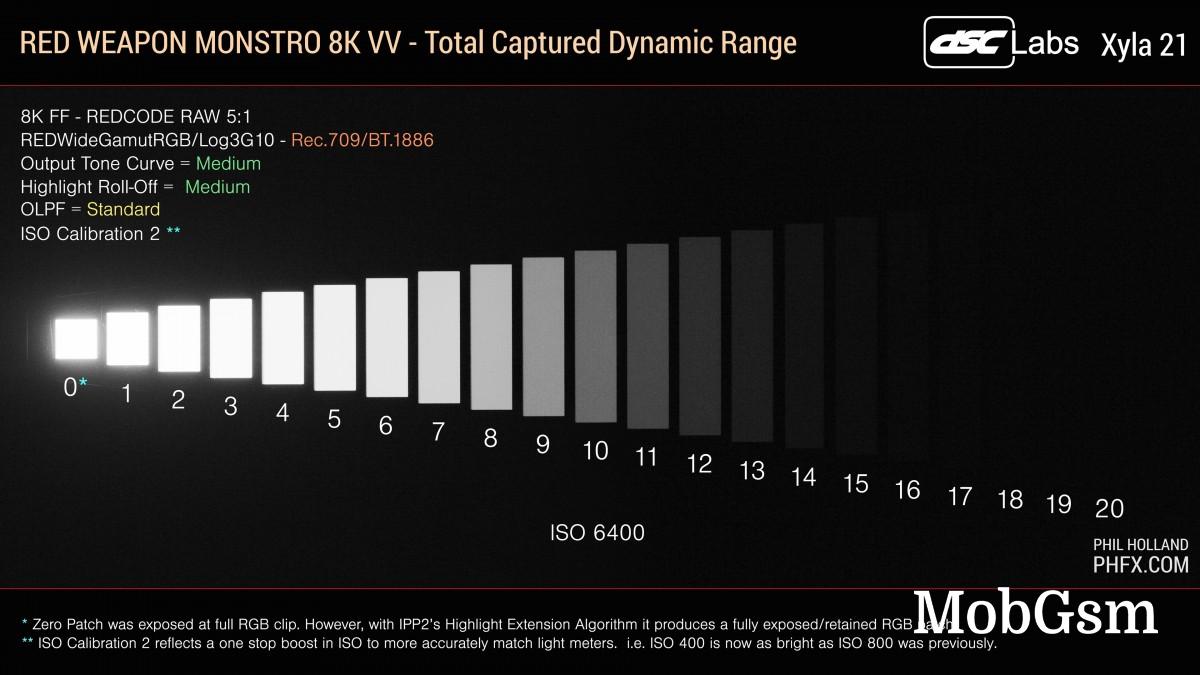

The dynamic range of a RED Weapon Monstro 8K VV is around 18 stops.

The dynamic range for luminance values is usually measured in stops. A stop isn't an absolute unit but rather a doubling of the light level. With every doubling of light, you get another stop of dynamic range.
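Because a stop is a doubling, the number of stops between two light levels is the base-2 logarithm of their ratio. A minimal sketch of that arithmetic follows; the function names are illustrative and not taken from any tool mentioned in this article.

```python
import math


def stops(brightest: float, dimmest: float) -> float:
    """Dynamic range in stops: how many doublings separate the two levels."""
    return math.log2(brightest / dimmest)


def contrast_ratio(n_stops: float) -> float:
    """Inverse of stops(): the brightest-to-dimmest ratio for a stop count."""
    return 2.0 ** n_stops


# One doubling of light is exactly one stop.
print(stops(2.0, 1.0))
# The brightest-to-dimmest ratio implied by an 18-stop range.
print(contrast_ratio(18))
```

The units cancel out in the ratio, which is why stops can describe a camera sensor and a display equally well.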

To have a high dynamic range video experience, you need end-to-end support. Everything from the camera capturing the content to the editing suite encoding it, the delivery format, and finally your television needs to support HDR. Otherwise, the process is compromised and the final result will either not be HDR or won't provide a good experience.

Standard dynamic range

Your typical digital video, or what has now come to be known as standard dynamic range video, has a lot of parameters defining it. Let's take the example of the video you get on a standard HD Blu-ray disc.

The video on most HD Blu-ray discs is a standard H.264 8-bit 4:2:0 file. H.264 is the codec and is one of the most commonly used video formats in the world. 8-bit is the bit depth, where each of the R-G-B primaries is allocated 8 bits of color information, leading to a total of 24 bits of color information. The number of colors for a particular bit depth is 2^n, where n is the total number of bits, so a 24-bit signal can have up to 16.77 million colors.
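The color count above is easy to check. This short sketch assumes three color channels with the same bit depth each; `color_count` is just an illustrative helper.

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors: 2 raised to the total number of bits."""
    return 2 ** (bits_per_channel * channels)


print(f"8-bit per channel:  {color_count(8):,} colors")
print(f"10-bit per channel: {color_count(10):,} colors")
```

Two extra bits per channel multiply the number of representable colors by 64, which is why the move from 8-bit to 10-bit matters more than it sounds.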

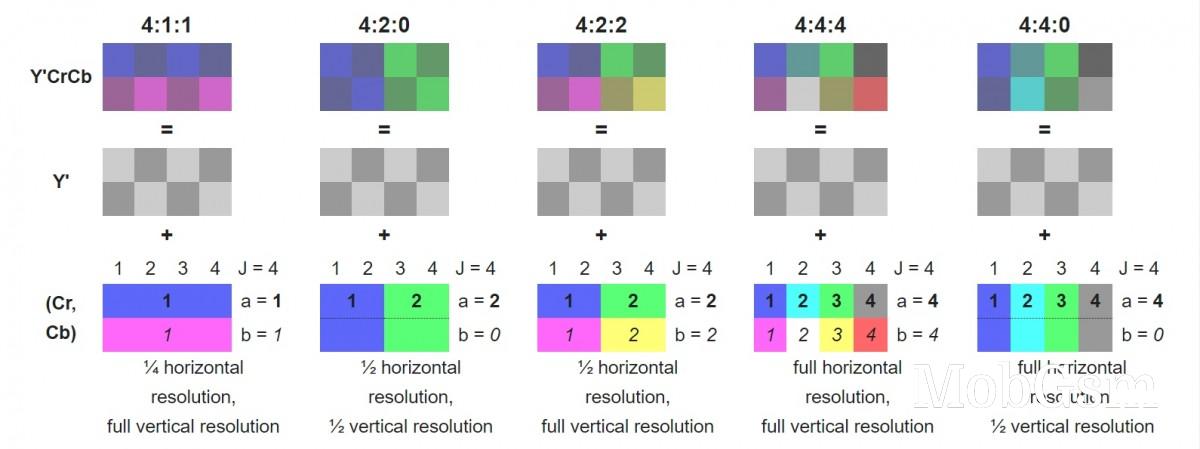

Image credit: Wikipedia

4:2:0 is the chroma subsampling, which is a way of compressing by reducing the color information. 4:2:0 has half the horizontal and half the vertical chroma resolution of a full 4:4:4 or RGB signal, which is fine for video content but not for computing use as text tends to get blurry at lower subsampling values.
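To put numbers on the subsampling, the sketch below computes the size of the luma and chroma planes for a frame. The divisor table and function names are illustrative; it assumes one luma plane and two chroma planes, as in a typical Y'CbCr signal.

```python
# (horizontal divisor, vertical divisor) applied to the two chroma planes
SUBSAMPLING = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}


def plane_sizes(width: int, height: int, scheme: str):
    """Return ((luma_w, luma_h), (chroma_w, chroma_h)) for one frame."""
    h_div, v_div = SUBSAMPLING[scheme]
    return (width, height), (width // h_div, height // v_div)


def samples_per_frame(width: int, height: int, scheme: str) -> int:
    """Total stored samples: one luma plane plus two chroma planes."""
    (lw, lh), (cw, ch) = plane_sizes(width, height, scheme)
    return lw * lh + 2 * cw * ch


for scheme in SUBSAMPLING:
    print(scheme, plane_sizes(1920, 1080, scheme),
          samples_per_frame(1920, 1080, scheme))
```

For a 1920x1080 frame, 4:2:0 stores each chroma plane at 960x540, so the frame carries half as many samples as the 4:4:4 version before the codec does any further compression.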

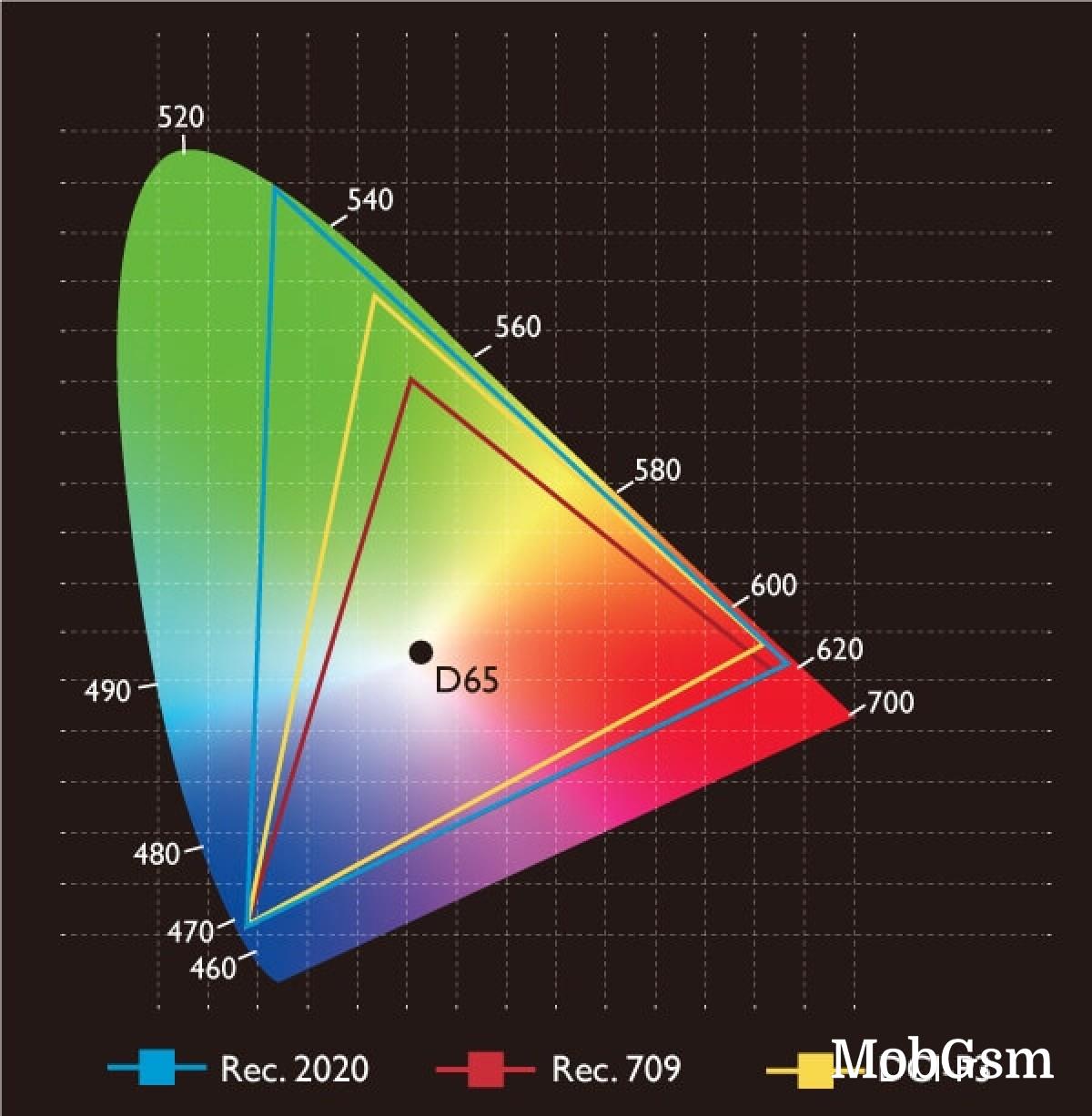

Rec. 709 color space as compared to Rec. 2020 and DCI-P3 within the visible color range

Next is the color space. HD Blu-rays are mastered in Rec. 709 color space. This is roughly equivalent to the sRGB color space used for computers and the internet, with the same 6504K D65 white point. However, Rec. 709 has a gamma of 2.4 while sRGB has a 2.2 gamma.

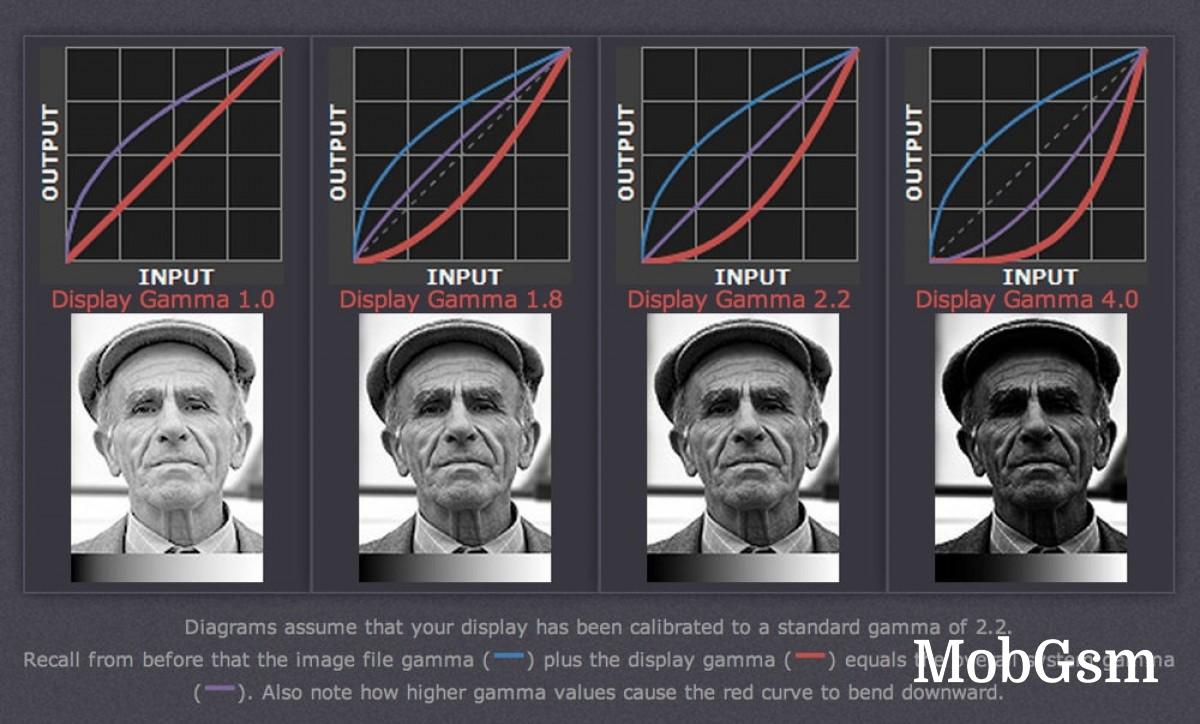

The color space defines the range of colors available. Each color can be mapped to a specific point in this 3D "space", which is defined by the range of visible colors to the human eye. Gamma is the electro-optical transfer function or EOTF for standard dynamic range video, which is a mathematical equation that turns the incoming electrical signals into visible color information. The gamma value broadly controls the luminance value of the colors in the content and changing the gamma value changes all the color values within that content. If you were to change the gamma value from 2.4 to 2.2, it would make the entire image brighter. Similarly, if a piece of content mastered at 2.4 gamma is viewed on a display calibrated to 2.8, then the entire image will look darker.
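The effect of the gamma value can be sketched with a pure power-law curve. This is a simplification that ignores black level and the exact curve shapes real standards define; `gamma_eotf` and the 100-nit default peak are assumptions made for illustration.

```python
def gamma_eotf(signal: float, gamma: float, peak_nits: float = 100.0) -> float:
    """Simplified power-law EOTF: normalized signal (0..1) to light in nits."""
    return peak_nits * signal ** gamma


# The same mid-level signal shown on displays with different gamma values.
for gamma in (2.2, 2.4, 2.8):
    print(f"gamma {gamma}: {gamma_eotf(0.5, gamma):.2f} nits")
```

Black (0.0) and peak white (1.0) land on the same output regardless of gamma. It is everything in between that gets brighter with a lower gamma and darker with a higher one.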

Finally, there is the max luminance. A standard dynamic range video is mastered at 100 nits of peak brightness. Colorists will have their SDR monitor calibrated to 100 nits in a room that is just a few nits above pitch dark and ensure the whitest whites don't exceed 100 nits, otherwise they'd just clip.

This limit on luminance is the greatest limitation of SDR video. While other factors like bit depth, chroma subsampling, and color space are variable and you can have higher values such as 10-bit, 4:4:4, Rec. 2020 in an SDR video, the luminance value will always be capped at 100 nits.

What this means is that there is a severe limit on the dynamic range of the content as the difference between 100 nits and 0 nits is only about 6 stops, which isn't much. This results in very little contrast in the image as the brightest values can only be so much brighter than the darkest values.

This difference isn't sufficient to produce lifelike content. When you step outside on a sunny day, you can have objects reflecting light in the tens of thousands of nits. The sun itself is outputting light at over a billion nits. Our eyes can't even successfully see all of that dynamic range at once, which is why our irises narrow in bright light and open wide again in the shadows. But we can still see bright objects, such as a white car glistening in the sun, as being ultra-bright, which is exactly what has been missing from televisions, which can come nowhere near that brightness.

Sure, you can make an SDR display brighter than 100 nits and most of them are. But that doesn't increase the dynamic range or the contrast of the display but rather the brightness of the entire image, including the shadow information. To reproduce real-world conditions accurately, we need displays and content that can get bright enough in the highlights while still maintaining the light levels of the shadow areas.
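A quick way to see why turning up the brightness does not add contrast: scaling every light level by the same factor leaves the ratio between highlights and shadows unchanged. The values below are hypothetical and chosen only for illustration.

```python
def contrast(white_nits: float, shadow_nits: float) -> float:
    """Ratio between the brightest and darkest levels in the image."""
    return white_nits / shadow_nits


def raise_brightness(levels, factor: float):
    """Turning up the backlight scales every level, shadows included."""
    return [level * factor for level in levels]


shadow, white = 0.5, 100.0  # hypothetical SDR shadow and peak white, in nits
brighter_shadow, brighter_white = raise_brightness([shadow, white], 2.0)

print(contrast(white, shadow))                    # before
print(contrast(brighter_white, brighter_shadow))  # after: same ratio
```

The highlights doubled, but so did the shadows. HDR instead raises the ceiling for highlights while leaving the shadows where they were.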

High dynamic range

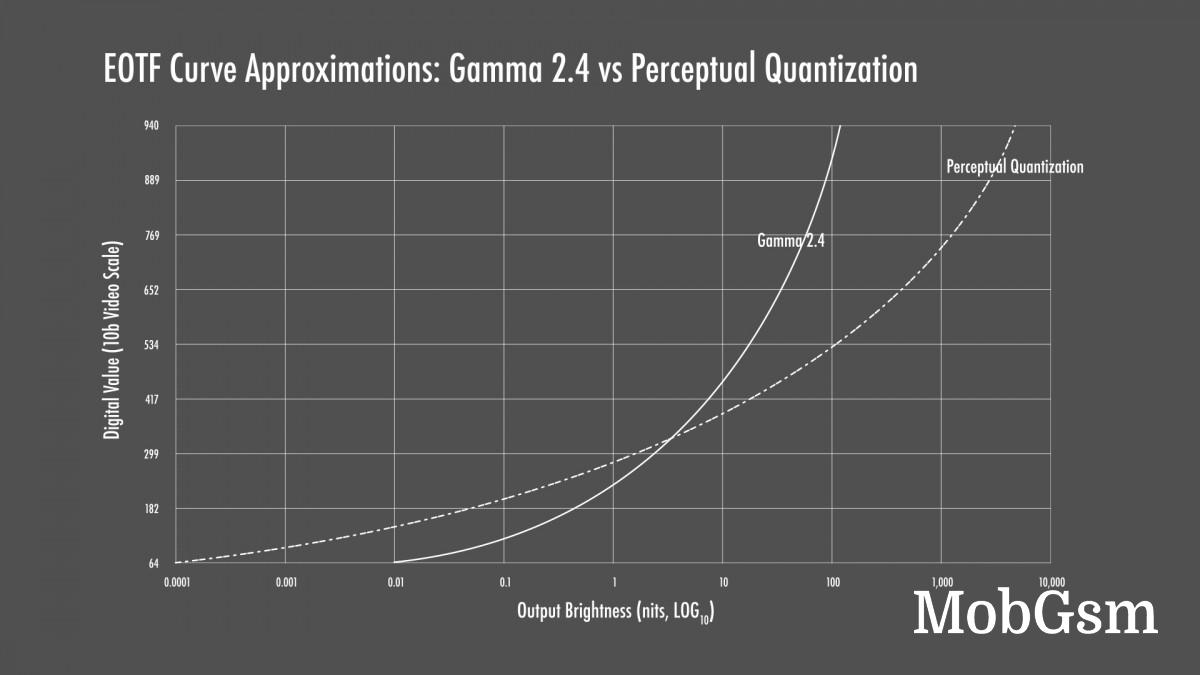

This is where high dynamic range comes in. The main advantage of high dynamic range video is the increase in the peak luminance value and it achieves this with the help of a new electro-optical transfer function called Perceptual Quantizer, also known as SMPTE ST 2084. This transfer function allows HDR video to have luminance values as high as 10,000 nits. HDR also has a lower black point of 0.0001 nits compared to the 0.01 nits black level floor for SDR, which allows more information in the shadow region before it clips to black. The result of this stretching of both the base and peak luminance value allows HDR content to have a massive dynamic range, which is simply impossible with standard content, allowing it to look much more realistic.
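For reference, here is a minimal sketch of the Perceptual Quantizer curve using the constants published in SMPTE ST 2084. It works on a normalized signal between 0 and 1 and ignores practical details such as limited-range code values; the function names are illustrative.

```python
# Constants as defined in SMPTE ST 2084
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) to absolute luminance in nits."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits to normalized PQ signal (0..1)."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100.0, 1000.0, 4000.0, 10000.0):
    print(f"{nits:>7.0f} nits -> signal {pq_inverse_eotf(nits):.3f}")
```

Unlike gamma, PQ maps signal values to absolute light levels. Roughly half of the signal range is spent on luminance at or below 100 nits, with the upper half reserved for everything up to 10,000 nits.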

Unfortunately, the full 10,000 nits figure for highlights is out of the reach of existing display technology. The brightest mastering monitors that cost tens of thousands of dollars can go as high as 4,000 nits but most consumer televisions cannot exceed 1000 nits, with OLED models being even dimmer.

Image credit: Mystery Box

Regardless of existing limitations, the higher theoretical limits of HDR allow much more freedom when mastering content. Professional colorists and mastering engineers now have a much bigger canvas to paint their picture. An HDR video doesn't necessarily mean the video is just brighter. In fact, if you look at most of the content today mastered in HDR, a lot of the video information would still be below 100 or 200 nits.

This is because not everything has to be too bright. The common misconception about HDR is that it makes everything brighter but that's not the case. What it allows is additional headroom so, for example, if a scene is taking place inside a dark room but with a bright lamp somewhere in the frame, the room itself can be below 100 nits of brightness but the lamp can now be 800 nits or even 2000 nits if the director wants. What this creates is contrast, where the room is realistically dark but the lamp can cut through that darkness and shine brighter, just as it would in real life.

This much larger difference in light values is what HDR brings to the table and what SDR cannot achieve. Again, if you were to just make your display brighter on SDR, it would make the entire room brighter, which is not what we want. The goal here is contrast, not just brightness.

This technique can be applied to a lot of areas in the frame. Small bright areas, also known as specular highlights, can be assigned higher luminance values in the editing process so they shine brighter than the rest of the surroundings in a realistic manner. Again, the intent here is to have contrast, not just make things brighter just for the sake of it. If the coloring artist or the director feels like nothing needs to be brighter than 200 nits in a particular scene or even the entire film, they can choose to do that (and some do).

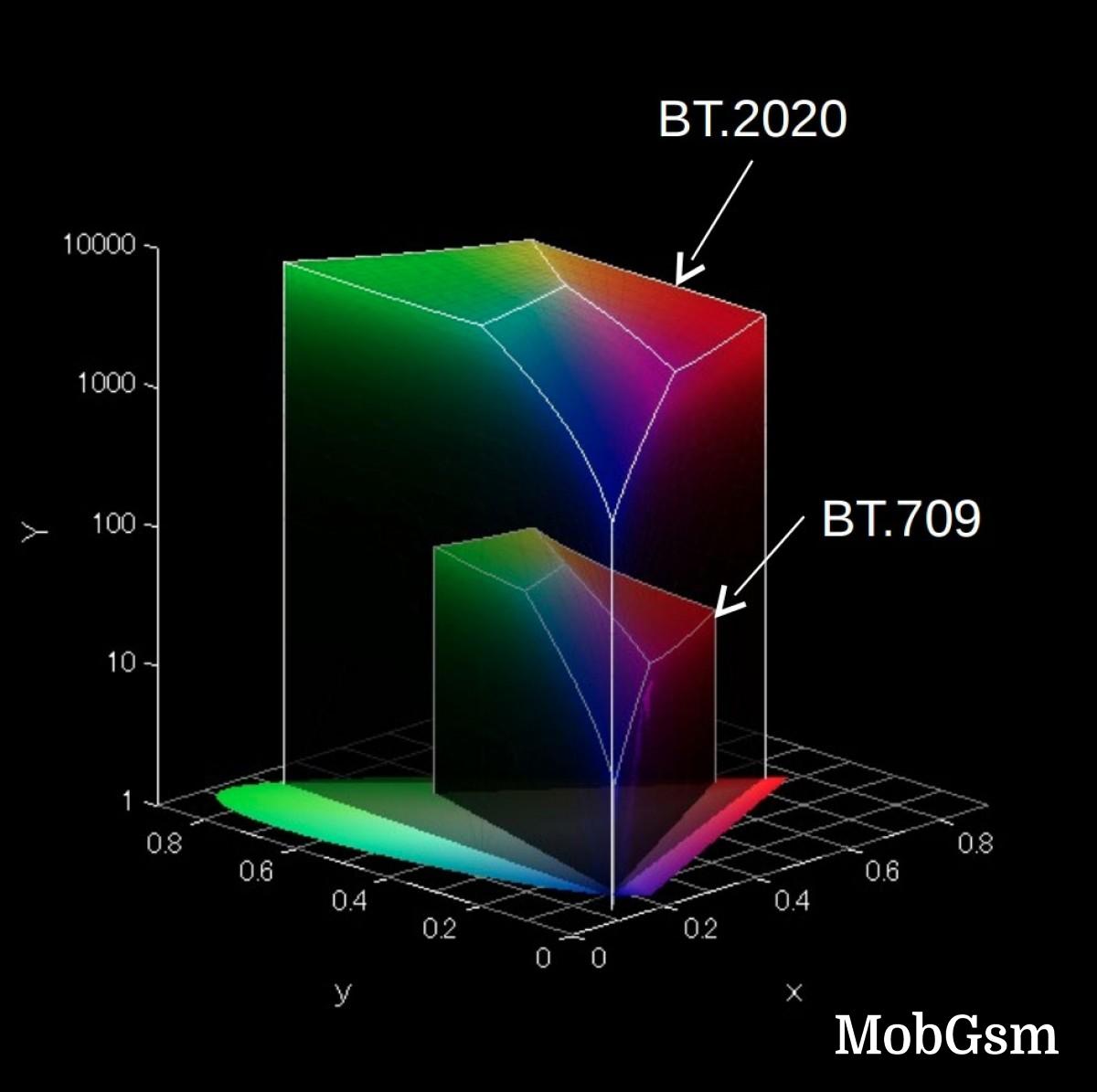

The wide dynamic range is only part of the story with HDR. HDR also has support for wide color gamut or WCG. While it is optional for standard dynamic range content, WCG is part of the HDR video format specifications, so you can always expect to see some use of wide color in your HDR content. HDR formats make use of the much wider Rec. 2020 color space, although the content within is often mastered to the smaller DCI-P3 color space as there aren't many displays that can cover the full 2020 space.

Just like with the dynamic range, the presence of WCG doesn't necessarily mean everything will have to look vibrant and oversaturated. WCG once again broadens the canvas and provides more color ranges for the artists to play around with when they are grading content for their HDR master. Most of the color you will see on-screen may still be within the Rec. 709 space but occasionally if an artist feels a particular shade of green that they are looking for falls outside the range of the 709 space, then they now have the option to choose from the much wider 2020 color set.

HDR content is also generally 10-bit or higher bit depth. The extra bits for every color channel result in less color banding and a more natural color gradation.
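Banding appears when a smooth gradient has too few code values to step through. The sketch below counts how many distinct codes a narrow gradient lands on at a given bit depth. The gradient range and sample count are arbitrary values picked for illustration.

```python
def distinct_levels(start: float, end: float, bits: int,
                    samples: int = 10_000) -> int:
    """Count the distinct code values a smooth ramp from start to end
    (normalized 0..1) is quantized to at the given bit depth."""
    max_code = 2 ** bits - 1
    codes = {
        round((start + (end - start) * i / (samples - 1)) * max_code)
        for i in range(samples)
    }
    return len(codes)


# A subtle gradient, such as a patch of evening sky.
print("8-bit steps: ", distinct_levels(0.40, 0.45, 8))
print("10-bit steps:", distinct_levels(0.40, 0.45, 10))
```

With about four times as many steps available across the same gradient, each step is smaller and the transitions are much harder to see.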

HDR formats

HDR currently has several different formats from different companies, but there are four that have gotten the most adoption.

The first is HDR10, which is the most standard form of HDR that you can get. If it's HDR, it most likely is HDR10 or at least has HDR10 acting as the base layer. Because of its standardization and widespread adoption, every HDR-capable device you purchase supports it. This makes it easy to find content for it and chances are whatever device you have will play it.

HDR10 is used as the default for UHD Blu-rays. Even discs that use other formats will have HDR10 as their base layer for backward compatibility. Streaming services like Netflix, Amazon, Hulu, YouTube, Vimeo, iTunes, and Disney+ all offer HDR10 by default for their HDR content, alongside whatever other versions they might have.

The ubiquity of HDR10 is its biggest strength and that's largely to do with it being royalty-free. Technically, it is also a fairly strong format, supporting luminance values up to 1000 nits with Rec. 2020 WCG support and 10-bit color depth (hence the name HDR10). Its main limitation, other than the 1000 nits ceiling for max luminance, is the use of static metadata.

HDR10 uses SMPTE ST 2086 static metadata, which includes information about the display on which the content was mastered. It also includes information such as Maximum Frame-Average Light Level (MaxFALL) and Maximum Content Light Level (MaxCLL). This information is used by the receiving display, such as your television, to adjust its own brightness for the content. Unfortunately, these values remain static throughout the runtime for HDR10 content, which produces less than ideal results wherein some scenes aren't as bright as they can be while others could be brighter than they needed to be.
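The two light-level values are simple statistics over the whole title: the content light level is the brightest single pixel anywhere, and the frame-average light level is the highest per-frame average. A minimal sketch, assuming each frame is given as a flat list of per-pixel light levels in nits (real tools derive these from the decoded video); the sample frames are made up.

```python
def max_content_light_level(frames) -> float:
    """Brightest single pixel found in any frame of the title."""
    return max(max(frame) for frame in frames)


def max_frame_average_light_level(frames) -> float:
    """Highest average light level of any single frame."""
    return max(sum(frame) / len(frame) for frame in frames)


# Three tiny hypothetical frames, four pixels each, values in nits.
frames = [
    [50.0, 80.0, 120.0, 150.0],    # ordinary scene
    [5.0, 5.0, 10.0, 900.0],       # dark scene with one bright highlight
    [200.0, 200.0, 200.0, 200.0],  # evenly bright scene
]

print(max_content_light_level(frames))
print(max_frame_average_light_level(frames))
```

Note that a single bright pixel in one frame sets the content light level for the entire runtime, which is exactly the weakness of static metadata described above.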

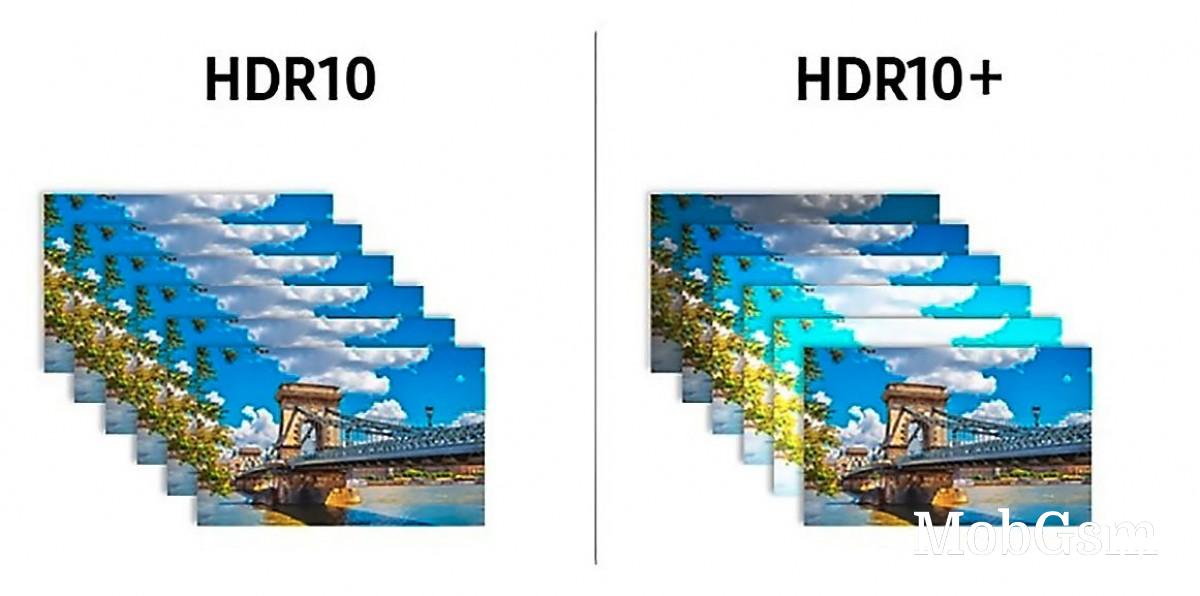

HDR10+ solves many of these issues. Created jointly by Samsung, Panasonic, and 20th Century Fox, the format has much of the same specifications as HDR10 but increases the maximum luminance value to 10,000 nits and supports dynamic metadata.

With the help of dynamic metadata, the colorist can include information on a scene-by-scene or frame-by-frame basis for adjusting the content light level. This information can then be used by your television to adjust its light output to match the content exactly, reproducing the creator's intent more closely. The higher luminance values also allow televisions capable of achieving higher brightness than 1000 nits to stretch their legs instead of clipping at 1000 nits.
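The difference between static and dynamic metadata can be shown with a deliberately crude model. Real televisions use proprietary tone-mapping curves, not the uniform scaling below; the display peak, scene values, and function name are all hypothetical.

```python
def scale_for_display(scene_nits, reference_peak: float, display_peak: float):
    """Toy tone mapping: if the reference peak exceeds what the display can
    do, scale every level down uniformly; otherwise leave the scene alone."""
    if reference_peak <= display_peak:
        return list(scene_nits)
    factor = display_peak / reference_peak
    return [nits * factor for nits in scene_nits]


DISPLAY_PEAK = 500.0  # hypothetical television
scenes = {
    "dark room": [1.0, 20.0, 200.0],
    "sunlit street": [50.0, 400.0, 1000.0],
}

# Static metadata: one peak value describes the whole title.
title_peak = max(max(levels) for levels in scenes.values())

for name, levels in scenes.items():
    static = scale_for_display(levels, title_peak, DISPLAY_PEAK)
    dynamic = scale_for_display(levels, max(levels), DISPLAY_PEAK)
    print(f"{name}: static={static} dynamic={dynamic}")
```

In this model the dark room is dimmed needlessly under static metadata, because the display has to plan for the 1000-nit street scene throughout. With per-scene values the dark room passes through untouched.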

HDR10+ content is backward compatible with HDR10 devices, as most of the differences are contained within the metadata, which can be ignored by non-HDR10+ displays. This will result in an experience similar to just watching an HDR10 video.

While HDR10+ claims to be royalty-free, there is a yearly administration fee, which is anywhere from $2500 to $10000 based on the product that is incorporating the technology.

HDR10+ hasn't seen much adoption despite its technical advantages over HDR10. This includes adoption from content creators, service providers, as well as equipment manufacturers. The only companies trying to push it are either the founders (Samsung, Panasonic) or the companies in the HDR10+ alliance (Amazon). Even among those companies, some like Panasonic have now started offering rival technologies like Dolby Vision on their televisions. 20th Century Fox has also started offering its UHD discs in Dolby Vision since Disney took it over. At this point, it's not clear if HDR10+ has a future or if it goes the way of HD-DVD.

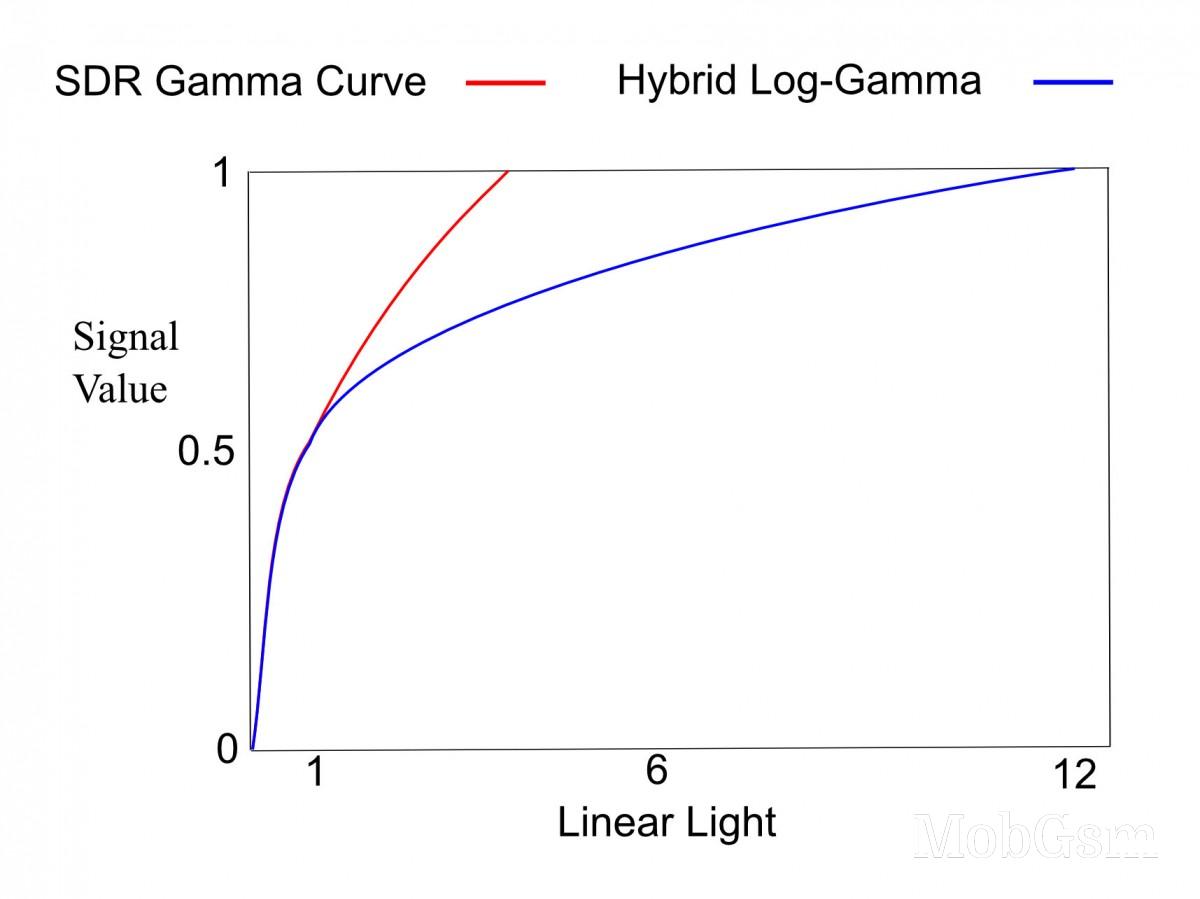

Before moving on to Dolby Vision, let's talk about Hybrid Log Gamma or HLG. Like the others, HLG is also an HDR format but built on different technologies and for different use cases. HLG uses a non-linear electro-optical transfer function, where the lower half of the signal value uses a gamma curve and the upper half uses a log curve. This is done so that the video can be played back on any television; when played on a standard television, it can interpret the standard gamma curve of the signal value resulting in an SDR video and when played on an HLG display it can also read the logarithmic part to produce the full HDR effect.
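The split between the gamma half and the log half is visible in the encoding curve itself. The sketch below follows the HLG camera-side curve (the OETF) with the constants given in ITU-R BT.2100, working on normalized scene light from 0 to 1. The display-side processing an actual HLG television applies is not covered here.

```python
import math

# Constants as given in ITU-R BT.2100 for HLG
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light: float) -> float:
    """Normalized scene light (0..1) to normalized HLG signal (0..1)."""
    if scene_light <= 1 / 12:
        # Lower half of the signal range: a square-root (gamma-like) curve.
        return math.sqrt(3 * scene_light)
    # Upper half of the signal range: a logarithmic curve.
    return A * math.log(12 * scene_light - B) + C


for light in (0.0, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two pieces meet at a signal value of 0.5, so an SDR television that only understands the gamma-like portion still gets a sensible picture from the lower half of the signal.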

HLG was created by the BBC and Japan's NHK for broadcasting. Standard HDR10 workflows require a lot of steps and also produce a signal that is incompatible with non-HDR displays. HLG was designed to bypass that by baking all the information into a backward-compatible format at the time of transmission.

While HLG has widespread adoption in devices due to its truly royalty-free nature, the format remains largely limited to select TV broadcast channels.

Dolby Vision

Dolby Vision is an HDR format similar to HDR10 and HDR10+. It is based on the Perceptual Quantizer EOTF and is capable of 10,000 nits maximum luminance. Dolby Vision also supports 12-bit color along with Rec. 2020. It was the first HDR format to support dynamic metadata, allowing scene by scene or even frame by frame tone mapping.

Since its introduction, Dolby Vision has seen steady adoption from studios as well as device manufacturers. While HDR10 continues to remain the default HDR format, and even UHD Blu-ray discs with Dolby Vision have an HDR10 base layer with a separate Dolby Vision metadata layer, several studios and streaming services have chosen to provide their content in Dolby Vision if they are doing an HDR grade.

This is despite the fact that, like all of Dolby's services, Dolby Vision is proprietary with a royalty fee for every device that incorporates it. The fee is not particularly high, with the cost per TV being lower than $3. Of course, when you are talking about millions of devices, especially things like smartphones, you can see how that number can go up.

However, while most streaming services seem to be all-in on Dolby Vision these days, film studios have struggled a bit to include Dolby Vision on all of their new UHD releases. This has to do with the physical limitations of the disc and the requirements set by the UHD Alliance, which forces the studios to have an HDR10 base layer alongside the Dolby Vision metadata for backward compatibility with older HDR televisions, which takes up additional space. This is something streaming services don't have to worry about as they can just serve the exact version that the viewer's television supports.

This has caused a slowdown in the number of Dolby Vision UHD titles released over the past year or so. Some studios have continued to soldier on despite the annoyances, but many others, including Disney, have decided to fall back on just releasing in standard HDR10 for physical media and reserving Dolby Vision for online streaming. Sounds counterintuitive but that's how things are currently.

Dolby Vision as a format has several bitstream profiles and levels, which dictate some of its features and specifications. These profiles vary based on the device that they are implemented on and also the method of content distribution. So far, there hasn't really been any reason for the consumer to be aware of these as normally it all "just works" as long as you have devices and content claiming to support Dolby Vision.

What you may have to be concerned with is Dolby's use of two different modes. One of them is the standard mode, also known as TV-led Dolby Vision, and the other is the low latency mode, also known as player-led Dolby Vision. The difference between them is that the standard mode does all the tone mapping and processing on the television itself, which produces a better image at the cost of slightly increased latency. The low latency mode does the tone mapping on the player, which produces less accurate results but reduces latency.

For this reason, this low latency mode has been preferred by consoles like the Xbox One X/S and the Xbox Series X/S. Also, most Sony televisions with Dolby Vision only support the low latency mode, but all the other television manufacturers support the standard mode. This caused an issue with some media players that didn't support the low latency mode initially but many of them have since been updated to support it.

Mastering in HDR

Creating and mastering content in Dolby Vision or any form of HDR can be complicated and time-consuming. It requires more and better equipment to capture and edit the footage, and greater input from the coloring artist for a final grade that doesn't look like someone just slapped an HDR badge on an SDR grade.

One of Hollywood's favorite cameras, the ARRI Alexa LF has a dynamic range of over 14 stops.

To start with, one needs a camera with a wide enough dynamic range. Fortunately, a lot of the full-frame and even some of the APS-C cameras on the market today have sensors with wide enough dynamic range that you can produce HDR content with them as long as you shoot in some form of log profile or directly in RAW. And the digital cinema cameras have had this ability for even longer. This means if you have a camera that costs over $2000, chances are it can record video that can be mastered into HDR.

Note the use of the keywords "mastered into" here. We are not looking for cameras that record directly in HDR. That's not how HDR content is made for cinema or television. The HDR you see in your movies or TV shows is all done in post-production using some really good quality footage. This allows the flexibility of producing multiple versions of HDR and also an SDR grade based on the client's requirements.

When you capture video in log profile or RAW from a camera, you get a video that is extremely wide dynamic range (generally upwards of 12 stops) but is mostly unusable unless it is color graded. Once shot, this footage then needs to be brought onto a computer where it will be color graded and processed into the final deliverable.

For this, you need an operating system that supports HDR. Fortunately, both Windows 10 and macOS do that in their latest public versions. Then you need color grading software that will either work alongside or as your non-linear editor. DaVinci Resolve by Blackmagic Design is a common choice these days but there are other options available, with Premiere Pro and Final Cut Pro X also supporting some level of HDR workflows.

The Sony BVM-HX310 has legendary status among reference monitors. It also costs around $40,000.

Lastly, you need a reference HDR monitor. This display needs to support at least 1000 nits of sustained brightness, 200,000:1 contrast ratio, and full DCI-P3 coverage (even though the container for HDR is Rec. 2020, the mastering is still done for P3). OLEDs, microLEDs, or dual-layer LCD are great but not necessary. You can get away with a sub-$5000 monitor if all you're doing is grading for your YouTube or Vimeo channel. However, if you are a professional color grading the next Disney blockbuster, then you would be using a reference HDR monitor that costs tens of thousands of dollars.

Since we are in an all-HDR environment now, we have a wide dynamic range and a wide color space to work with. This means you are now able to view and retain more of the dynamic range that the camera captured on the screen and in the final grade. Brighter highlights will show up better without clipping as long as the image was correctly exposed and the scene was within the camera's dynamic range. You can also retain more of the color information from the capture; most high-end or cinema cameras can record in 10-bit or even 12-bit natively in 4:2:2.

How the grade itself goes depends upon the creative director's intent. This has come into contention recently after people watched the HDR masters of popular movies and realized that the HDR master doesn't look meaningfully different from the SDR grade. Others, including some colorists and cinematographers, have come out in defense of this practice. They call it the creator's intent and argue that directors and cinematographers should have the final say in the look of the image: if they don't want to include bright highlights or the wide color gamut of HDR, then they don't have to. This brings into question why even use HDR in that case and whether this is being done purely for marketing, but that's a discussion for another day.

Once the grade is complete, the final steps depend on the format that it will be delivered in. If you are delivering it in Dolby Vision, which is usually the case these days, the final grade first needs to be analyzed by the editing software to generate the dynamic metadata. You now have the primary or "hero" grade, which will be used to produce the other versions.

This Dolby Vision grade is then passed through a CMU, or content mastering unit, and on to an external SDR monitor calibrated to 100 nits to produce the SDR version from the hero grade. Here, the coloring artist will do what is known as a "trim pass", where they will go through the content again and see how it looks on an SDR display while making any required changes along the way. Producing an SDR grade this way from the initial HDR grade is now the standard industry practice and how companies like Netflix require you to submit your content to them. The Dolby Vision master can also be used to produce a generic HDR10 deliverable if that's what your client requires.

After this, the content goes through the final stages of quality control and along with the metadata is sent off for delivery. This is how HDR content today is captured and mastered for physical and streaming media. If you have to make an HDR video today for, say, YouTube, the process would be similar other than having to export in HDR10 only as YouTube does not support HDR10+ or Dolby Vision currently.

It's worth noting that while we have referenced HDR10 and Dolby Vision as "formats" in this article, they are not different codecs or containers. The HDR information is added as metadata on top of the existing SDR video, and the video itself can use any of the existing codecs such as H.264, HEVC, VP9, or anything else that might come out in the future. Having said that, UHD Blu-ray does use HEVC as its format of choice, as do many of the streaming services for their 4K HDR streams. This doesn't mean you have to have HEVC support for HDR, just that it's the format the industry chose to go with.

HDR on smartphones

HDR video support on smartphones has been hit or miss over the years. Until very recently, it was only limited to the consumption of HDR content but now you can also create content in HDR on some phones.

In terms of consumption, we have seen varying levels of success. In most cases, phones that claim to support HDR can decode and play back the content without messing up the colors. However, many of these devices will have an LCD or an OLED without an especially high brightness ceiling so the experience is often no better than watching a standard dynamic range video, albeit with wide color. In some cases, even the latter isn't a given.

Most smartphones also support just HDR10, with very few supporting HDR10+ and even fewer supporting Dolby Vision. HDR10+ is largely irrelevant but if you are a Netflix subscriber, you are missing out on a lot of Dolby Vision content and having to fall back on the less impressive HDR10 version. Apple is the only smartphone manufacturer that is all-in on Dolby Vision across its entire iPhone line with none of the Android smartphones on the market today supporting this format.

However, Apple is also the one that has been pushing out "fake HDR" devices for years, since the launch of the iPhone 8 series. All of its LCD iPhones and several of the iPads since have had support for HDR10 and Dolby Vision in software but no actual HDR displays. Only the OLED iPhones have displays capable of HDR. What the OS then does is tonemap the light levels to fit within the dynamic range of these non-HDR displays, which often results in a dark image and poor contrast. You can see this in apps that support HDR, such as Netflix or Apple TV, where the fake HDR content actually looks worse than the SDR version of the same content. And since there's no way to disable it, you are pretty much stuck with it.

As for capturing in HDR, we have had smartphones for the last couple of years that have been capturing and saving video directly in HDR10 (HDR10+ in the case of Samsung phones). This video is fully color graded just like the SDR video, so you don't have to do anything to it, and it can be watched on the phone or any other HDR-compatible device.

Apple decided to join the fray with its iPhone 12 series recording in Dolby Vision. We don't think it is especially impressive that the iPhone 12 can produce videos in Dolby Vision as opposed to some other HDR format but it is impressive that it is doing it at up to 4K at 60fps (30fps on non-Pro models) and that it lets you edit it afterwards on the phone itself.

Having seen these videos, we have mixed reactions. While the videos do have greater dynamic range and contrast and do show better detail in the highlights along with less banding, the impact of HDR will vary based on the scene. Also, because the color and luminance grading is essentially done by software using data from phone camera sensors, don't expect results as impressive as from cinema camera footage graded by professional colorists. But for what it's worth, they look quite decent.

The issue currently is with sharing, as the HDR footage isn't compatible with most online sharing services. If the phone records in standard HDR10, you could still post it to YouTube or Vimeo as they do support HDR. However, there's not much you can do with the Dolby Vision video right now other than watch it on your phone. If you share it, the phone will automatically strip it of its metadata and turn it into an SDR video.

It's difficult to say when other services will support HDR, if at all. They can't just flick a switch for a format that few people can play on their devices and that even fewer can experience correctly. We think it could be a while before something like Twitter gets support for Dolby Vision video.

HDR in photography

The term HDR has been used in photography for years now, even before it was introduced for video. The name was given to the technique of stacking multiple images of different exposures, thereby combining them into a single image that had a "wide" dynamic range. However, the final result isn't exactly wide dynamic range; the technique was designed to cheat around the limitations of the print and displays of that time.

Stacking images of different exposures captures detail from both shadows and highlights, and the merged result is then compressed to fit a normal image through a process called tonemapping. The final image is still standard dynamic range, as you aren't exactly experiencing the full range of light information captured in the image, but it served the purpose of just being able to see everything in the frame without being over or underexposed. It also got around the limitations of early digital cameras, which had notoriously worse dynamic range than film.
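As a rough illustration of this photographic process, the toy sketch below merges two bracketed exposures into a relative radiance estimate and then compresses the result back into a 0 to 1 range with a simple Reinhard-style curve. It is not how any particular camera or phone does it; the thresholds, pixel values, and function names are assumptions made for the example.

```python
def merge_exposures(brackets, low: float = 0.05, high: float = 0.95):
    """Estimate relative scene radiance per pixel from bracketed shots.

    brackets: list of (pixels, exposure_time), with linear pixel values 0..1.
    Samples that are nearly black or clipped (hypothetical thresholds) are
    skipped, so each pixel is taken from the exposures that captured it well.
    """
    radiance = []
    for i in range(len(brackets[0][0])):
        estimates = [p[i] / t for p, t in brackets if low <= p[i] <= high]
        if not estimates:
            # No usable sample: fall back to the shortest exposure.
            p, t = min(brackets, key=lambda bracket: bracket[1])
            estimates = [p[i] / t]
        radiance.append(sum(estimates) / len(estimates))
    return radiance


def tone_map(radiance):
    """Reinhard-style global curve: squeeze any radiance into 0..1."""
    return [r / (1.0 + r) for r in radiance]


# Two pixels: a shadow and a highlight that clips in the long exposure.
brackets = [
    ([0.1, 1.0], 1.0),     # long exposure: shadow is fine, highlight clipped
    ([0.025, 0.5], 0.25),  # short exposure: shadow too dark, highlight fine
]

radiance = merge_exposures(brackets)
print(radiance)
print(tone_map(radiance))
```

The merged radiance holds more range than either shot, but the last step folds it back into a standard image, which is why the result is still SDR.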

This technique is still used on cameras, especially on smartphones, and is still referred to as HDR. However, this is not the same HDR as the one we have been talking about in this article, as it doesn't use a different EOTF nor does it differ technically from a standard digital photograph.

However, there are now cameras that can capture images in HDR PQ. This is the same Perceptual Quantizer EOTF that we talked about for HDR video, which means the still images actually are capable of storing higher luminance values, which when viewed on an HDR display show brighter highlights and thus an actual wide dynamic range.

The cameras that can do this are the Canon EOS 1D X Mark III, EOS R5, and the EOS R6. The images are saved in HEIF or RAW and can only be viewed correctly on an HDR-compatible display and device. We will likely see more devices adopt this technology in the future, including smartphones, but again there will be limits on sharing in this format for some time.

HDR in video games

We have seen HDR PQ be used in video and even in photos but HDR is also slowly growing in popularity in games. Once again, it uses the same HDR PQ algorithm to produce wide dynamic range images and the output can be in any of the popular standards, including HDR10 or Dolby Vision.

Since games are basically made from scratch and every element is bespoke, adding HDR to a game is easier as you just have to assign a different light value to a color or texture than you would for the SDR version. The trick is to not have it be distracting or overwhelming, but when done right, it can have a great impact on the overall experience, especially in story-driven titles. You also get to benefit from the increased color range, which games can exploit much better due to their often fantastical settings that don't need to look realistic like film or television.

HDR has seen some adoption in console games as televisions usually handle HDR well. However, it's not that common for PC titles because Windows still has a substandard implementation of HDR that requires you to switch to a different HDR mode from the settings and then makes all non-HDR elements on-screen look weird. Cheap HDR monitors are also generally quite horrible so the overall experience often leaves a lot to be desired. This is likely why HDR hasn't taken off in gaming as much even though it has incredible potential in this area.

Limitations of HDR

While HDR as a technology does not have many limitations of its own, it has proven to be somewhat difficult to implement and has largely been held back by the hardware available today.

HDR is very demanding on the hardware. The current specifications of the technology aren't even possible with today's commercially available displays, with both the color space coverage and especially the peak brightness being well out of the reach of what is viable. In some ways, this is good as the HDR standard itself does not have to evolve much over the years as the hardware will take some time to catch up and even existing content can look better with future hardware. However, it does mean that as of today, we cannot experience HDR the way it is meant to be.

Even within the hardware available today, there is a massive discrepancy in what you can experience and it depends on a variety of factors. Price is by far the biggest one, with the cheaper HDR displays simply being incapable of showing the full potential of the technology. Many of the budget televisions, monitors, and even smartphones claiming to support HDR only have the requisite software support to be able to play HDR PQ content. The actual hardware on these often cannot achieve high enough brightness, contrast, bit depth, and color gamut coverage to provide an experience that is in any way meaningfully better than SDR.

The different display technologies have also resulted in another layer of disparity in HDR performance. Self-emitting panels like OLED are ideal for HDR as they can control the light output on a per-pixel level, leading to localized brightness and infinite contrast. However, OLEDs are limited in their light output by temperature and power concerns and thus they don't have a particularly high peak light output and even that value cannot be sustained for too long.

The Panasonic OLEDs are considered best in class despite the inherent limitations of OLED.

On the other hand, backlit panels like LCD can get immensely bright and can sustain that brightness for much longer. However, even with local dimming, LCD panels do not have localized light output at the same per-pixel level as OLED, which reduces their contrast. Due to this, videophiles today prefer to go with OLED if they can afford it even with concerns over brightness and burn-in, as the per-pixel light output is still the best way to experience HDR.
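The trade-off between the two panel types is easier to see with a little arithmetic. The Python sketch below computes a contrast ratio and the equivalent dynamic range in stops from a peak and a black level. The function names are invented for this example, and the panel figures in the usage note are hypothetical round numbers chosen for illustration, not measurements of any real display.

```python
import math


def contrast_ratio(peak_nits, black_nits):
    """Brightest output divided by darkest output.

    A panel that can switch pixels fully off has a black level of zero,
    which makes the ratio infinite.
    """
    if black_nits <= 0:
        return math.inf
    return peak_nits / black_nits


def dynamic_range_stops(peak_nits, black_nits):
    """The same ratio expressed in stops (each stop is a doubling of light)."""
    ratio = contrast_ratio(peak_nits, black_nits)
    return math.inf if math.isinf(ratio) else math.log2(ratio)
```

For a hypothetical LCD with a 1,500 nit peak and a 0.05 nit black level, contrast_ratio(1500, 0.05) works out to roughly 30,000:1, or a little under 15 stops. For a hypothetical OLED with a 700 nit peak and pixels that turn off completely, contrast_ratio(700, 0) is infinite, even though the panel is less than half as bright.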

The HDR experience on computers also leaves a lot to be desired, especially on Windows. On a media player or a game console, you usually consume the HDR content fullscreen, so the device doesn't have to worry about rendering SDR and HDR elements at the same time and can just switch back and forth between them. On a PC, you can have HDR content playing in a video over an SDR desktop, so it needs to handle both formats gracefully. Windows has traditionally struggled with even just being able to have two different color spaces active at once, so it's hardly a surprise that it falls flat on its face handling HDR inside an SDR desktop environment and requires you to force HDR for the entire screen, making the non-HDR elements look odd. macOS generally handles this better, but then again Apple has always been better at color management.

As alluded to earlier, mastering and distributing HDR has also not been the easiest process. Studios now have to produce two versions of their content if they choose to support HDR, which increases the time put into post-production, not to mention the specialized equipment required to capture, master, and distribute the additional files. There's also definitely some HDR content out there that hasn't been mastered correctly or is just an SDR grade inside an HDR container. Such content could leave a bad taste in your mouth if it's your first time experiencing HDR and may cause users to underestimate the technology.

Final thoughts

HDR is one of the greatest advancements in video technology, of that there is very little doubt. The improvements it brings to dynamic range and color add dramatically to the realism and make the content that much more immersive. Most people agree that HDR has a far greater impact on image fidelity than a bump up in resolution. Like high frame rates, it's something you don't need to be an expert to notice.

However, we are still in the early days of this technology. The hardware required to correctly display HDR isn't commercially available today and it's going to be a long time before it becomes affordable to the masses. That's not to say you can't have a great HDR experience today; pick up an LG or Panasonic OLED, stick an Oppo 203 in there (if you can still find one), hook up an honest-to-goodness surround sound system (none of that soundbar nonsense) and you are in for a treat. And if you can experience this today without robbing a bank, imagine how good it's going to be in the next ten years.

But if you don't have an HDR-compatible television or smartphone, that's fine too. As good as HDR is, traditional video can also be very good. Just pick up an HD Blu-ray and you'll be amazed at how good even standard 1080p video can look. We think it's better to experience good SDR than poorly rendered HDR on a cheap TV set. And we honestly don't think anyone should care much at all about being able to record HDR video on their phone, especially if they like to share those videos with others.

However, we are deeply fascinated by what the future holds for this technology and can't wait for great quality HDR televisions at affordable prices, as well as greater support for content and online services for sharing content in HDR. Maybe things will be a lot better when we decide to do our next article around HDR. But that's all for now. If you have any further questions, you can leave them in the comments below. We hope this article helped you understand HDR, or at least sparked enough curiosity for you to look into it further and make a more informed purchase decision in the future.