OpenAI announces Sora, its text to video-generative AI model

OpenAI announced its newest diffusion model Sora which harnesses the power of text to video creation. The newest AI model from the ChatGPT maker is capable of generating videos in various resolutions and aspect ratios and can also edit existing videos allowing for a quick change of scenery, lighting and shooting style all from a text prompt. Sora can also generate videos based on a still image or even extend existing videos by filling in missing frames.

OpenAI shares that Sora is currently able to generate up to a minute of Full HD video content and the examples we’ve seen look promising. You can check out Sora’s landing page for more generated video samples.

Sora can generate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background. The model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world.

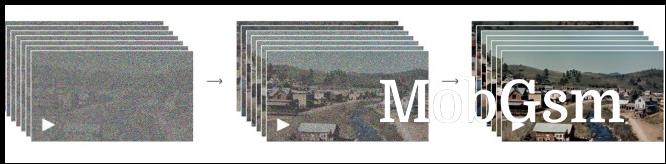

It works by using a transformer architecture similar to ChatGPT where videos and images are presented as smaller units of data called patches. Videos generated by Sora start as static noise with the model gradually removing noise to form the final product.

Noisy input patches transformed to high quality video

OpenAI shared it is leveraging its existing safety protocols used in DALL·E 3. Sora is currently being tested by “red teamers” - experts who will carry out tests and asses the model for potential risks ahead of its official launch.

OpenAI will also conduct talks with policymakers, artists, and educators to see potential concerns and use cases for Sora. There’s no official launch date provided for now.